Thanks to their low latency, TPUs are well suited to applications that need real-time predictions, such as recommendation systems and fraud detection.

TPUs are optimized for training complex models, such as BERT and GPT-style large language models, which shortens training time and lowers training cost.

Academic and corporate researchers use TPUs for tasks such as climate modeling and protein folding simulations, benefiting from their compute power and efficiency.

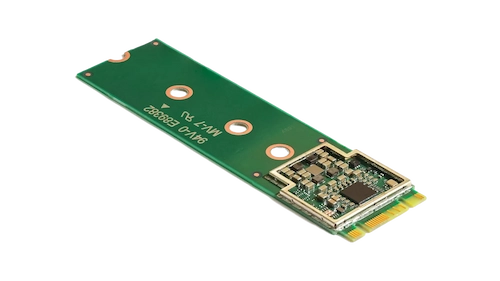

This compact accelerator speeds up on-device machine learning, enabling high-speed inference at low power consumption.

By integrating the Coral M.2 Accelerator into your system, you can run efficient, real-time machine learning directly on the device, reducing both latency and reliance on cloud computing.

The Hailo-8 Edge AI processor delivers up to 26 tera-operations per second (TOPS) in a compact package smaller than a penny, memory included.

Its architecture, optimized for neural networks, enables efficient real-time deep learning on edge devices with minimal power consumption, making it well suited to automotive, smart city, and industrial automation applications.

This design enables efficient AI processing at the edge while reducing cost and power consumption.

TPUs are purpose-built for matrix-heavy computation. On such workloads they can deliver shorter training and inference times than general-purpose GPUs.
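To make "matrix-heavy" concrete, here is a minimal sketch in plain Python: a dense neural-network layer boils down to a matrix multiplication, and its cost is usually counted in multiply-accumulate (MAC) operations. The function names `matmul` and `mac_count` and the layer sizes are illustrative assumptions, not part of any TPU API.

```python
def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix given as nested lists."""
    m, k, n = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]


def mac_count(m, k, n):
    """Multiply-accumulate operations needed for an (m x k) @ (k x n) product."""
    return m * k * n


# Hypothetical dense layer: a batch of 2 inputs with 3 features, 2 output units.
inputs = [[1.0, 2.0, 3.0],
          [4.0, 5.0, 6.0]]
weights = [[1.0, 0.0],
           [0.0, 1.0],
           [1.0, 1.0]]

outputs = matmul(inputs, weights)
print(outputs)             # [[4.0, 5.0], [10.0, 11.0]]
print(mac_count(2, 3, 2))  # 12
```

The MAC count grows as the product of all three dimensions, which is why hardware dedicated to this one operation can pay off as models get larger.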

TPUs support distributed training across multiple units. This scalability is key to training large models efficiently.
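One common way to spread training across several units is data parallelism: each unit computes a gradient on its own shard of the batch, and the results are averaged. The sketch below simulates that idea sequentially in plain Python. The toy model `y = w * x`, the data, and the function names are assumptions made for illustration; this is not how any particular TPU runtime implements it.

```python
def gradient(w, batch):
    """Mean-squared-error gradient for the 1-D model y = w * x over a batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_gradient(w, batch, num_workers):
    """Split the batch into equal shards, compute one gradient per shard, then average."""
    if len(batch) % num_workers != 0:
        raise ValueError("batch size must be divisible by num_workers")
    shard_size = len(batch) // num_workers
    shards = [batch[i * shard_size:(i + 1) * shard_size]
              for i in range(num_workers)]
    # On real hardware each shard would be processed by a separate unit in parallel.
    local_grads = [gradient(w, shard) for shard in shards]
    # Averaging stands in for the all-reduce step between units.
    return sum(local_grads) / num_workers


# Hypothetical data generated from y = 3x.
batch = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0), (4.0, 12.0)]
w = 0.0

full = gradient(w, batch)
sharded = data_parallel_gradient(w, batch, num_workers=2)
print(full, sharded)  # -45.0 -45.0
```

With equal-sized shards the averaged gradient matches the full-batch gradient, so adding units changes how fast a step runs, not what the step computes.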

TPUs support the major machine learning frameworks, including TensorFlow, PyTorch (via OpenXLA), and JAX, so they integrate smoothly with existing workflows.

TPUs are integrated with services such as Google Kubernetes Engine (GKE) and Vertex AI, which simplifies orchestrating and managing AI workloads.