Because they can serve predictions with low latency, TPUs suit applications that need real-time results, such as recommendation engines and fraud detection systems.

TPUs are well suited to training large transformer models; Google trained both BERT and PaLM on TPUs. For such workloads, TPUs can shorten training time and reduce cost.

Academic and enterprise researchers utilize TPUs for tasks like climate modeling and protein folding simulations, benefiting from their computational power and efficiency.

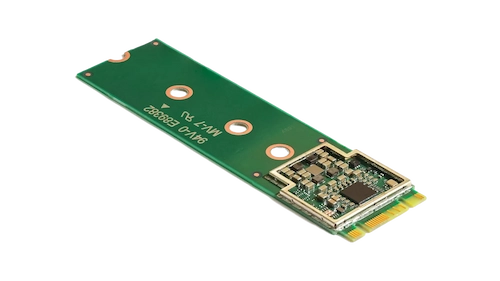

The Coral M.2 Accelerator is a compact module built around Google's Edge TPU coprocessor. It brings high-speed, low-power inferencing to on-device machine learning.

By adding the Coral M.2 Accelerator to your system, you can run machine learning inference in real time directly on the device, reducing latency and reliance on cloud-based computation.

The Hailo-8 edge AI processor delivers up to 26 tera-operations per second (TOPS) in a compact form factor smaller than a penny, including its memory.

Its architecture, optimized for neural networks, enables efficient, real-time deep learning on edge devices with minimal power consumption, making it ideal for applications in automotive, smart cities, and industrial automation.

This design allows for high-performance AI processing at the edge while reducing costs and energy usage.
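To see what a TOPS rating implies in practice, the back-of-envelope sketch below converts peak TOPS into an upper bound on inferences per second. The per-inference operation count and the utilization fraction are hypothetical assumptions, not Hailo figures. Real throughput also depends on memory bandwidth, numeric precision (edge accelerator TOPS ratings usually refer to low-precision integer operations), and how well a model maps onto the hardware.

```python
def peak_inferences_per_second(tops, gops_per_inference):
    """Theoretical ceiling: rated ops per second divided by ops per inference."""
    return (tops * 1e12) / (gops_per_inference * 1e9)


def estimated_inferences_per_second(tops, gops_per_inference, utilization):
    """Scale the ceiling by an assumed fraction of peak actually achieved."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return peak_inferences_per_second(tops, gops_per_inference) * utilization


# 26 TOPS is the Hailo-8 rating quoted above. The 10 GOPs-per-inference model
# cost and the 25% utilization are hypothetical values for illustration only.
peak = peak_inferences_per_second(26, 10)
estimate = estimated_inferences_per_second(26, 10, 0.25)
print(f"peak: {peak:.0f} inferences/s, at 25% utilization: {estimate:.0f} inferences/s")
```

With these assumed numbers the script prints a peak of 2600 inferences per second and about 650 at 25% utilization. The gap between the two is the reason vendor TOPS figures are best read as ceilings rather than expected throughput.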

TPUs are purpose-built for matrix-heavy computations and, for many deep learning workloads, can deliver faster training and inference than general-purpose GPUs.
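To make "matrix-heavy" concrete: applying a dense neural-network layer to a batch is a matrix multiplication, and every output element is a chain of multiply-accumulate (MAC) operations. The sketch below spells out those MACs in plain Python and counts the floating-point operations involved. TPUs perform the same arithmetic in hardware matrix units built as systolic arrays; the function names here are illustrative and not part of any TPU API.

```python
def matmul(a, b):
    """Multiply an m x k matrix by a k x n matrix using explicit MACs."""
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    out = [[0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            acc = 0
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one multiply-accumulate (MAC)
            out[i][j] = acc
    return out


def matmul_flops(m, k, n):
    """FLOPs for an (m x k) @ (k x n) product: one multiply plus one add per MAC."""
    return 2 * m * k * n


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
print(matmul_flops(32, 1024, 4096))  # 268435456
```

For example, a batch of 32 inputs with 1,024 features passing through a layer with 4,096 outputs needs 268,435,456 floating-point operations for that single layer. Hardware that performs many MACs per clock cycle turns that volume of work into a short computation.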

TPUs can be linked into Pods over dedicated high-speed interconnects, enabling distributed training across many chips. This scalability is crucial for training large models efficiently.
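Synchronous data parallelism is one common way to spread training across multiple units. Each worker holds a copy of the model and computes gradients on its own shard of the batch. The gradients are then averaged (an all-reduce) so every replica applies the same update. The pure-Python sketch below simulates this on a hypothetical one-parameter linear model. The "workers" are ordinary function calls rather than real TPU cores, and all helper names are illustrative.

```python
def grad_mse(w, xs, ys):
    """d/dw of mean((w*x - y)^2) for the model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def shard(seq, num_workers):
    """Split seq into num_workers equal, contiguous shards."""
    if len(seq) % num_workers:
        raise ValueError("batch size must divide evenly across workers")
    size = len(seq) // num_workers
    return [seq[i * size:(i + 1) * size] for i in range(num_workers)]


def data_parallel_step(w, xs, ys, num_workers, lr):
    """One step: per-worker gradients, simulated all-reduce mean, shared update."""
    local_grads = [
        grad_mse(w, xs_s, ys_s)
        for xs_s, ys_s in zip(shard(xs, num_workers), shard(ys, num_workers))
    ]
    avg_grad = sum(local_grads) / num_workers  # the value an all-reduce would produce
    return w - lr * avg_grad


# Hypothetical data generated from y = 3x; training should recover w close to 3.
xs = [float(i) for i in range(1, 9)]
ys = [3.0 * x for x in xs]
w = 0.0
for _ in range(50):
    w = data_parallel_step(w, xs, ys, num_workers=4, lr=0.01)
print(round(w, 6))
```

Because the shards are equal in size, the average of the shard gradients equals the full-batch gradient, so four simulated workers take the same step as a single worker on the whole batch. On real TPUs, frameworks handle the sharding and the all-reduce; in JAX, for example, this pattern can be expressed with `jax.pmap` and `jax.lax.pmean`.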

TPUs support major machine learning frameworks, including TensorFlow, PyTorch (via PyTorch/XLA, which builds on OpenXLA), and JAX, so they can be integrated into existing workflows.

TPUs are integrated with services like Google Kubernetes Engine (GKE) and Vertex AI, facilitating easy orchestration and management of AI workloads.