NVIDIA A100 and H100 configurations, perfect for AI, deep learning, visualization and high-performance computing.

NVMe disks

24/7 support

Unbeatable prices

5-minute deployment

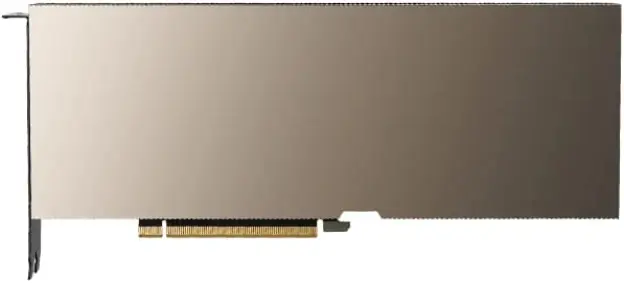

NVIDIA A100 GPU

| NVIDIA A100 spec | Value |
|---|---|
| Video memory capacity | 40GB HBM2 / 80GB HBM2e |
| CUDA cores | 6,912 |
| Max bandwidth | 1.6 TB/s (40GB) / up to 2 TB/s (80GB) |

The NVIDIA A100 GPU offers the performance, scalability, and efficiency that AI and deep learning applications demand, making it an excellent option for businesses and researchers looking for cutting-edge computational power.

Ampere architecture

With 54 billion transistors, the GA100 chip at the heart of the A100 is one of the largest 7-nanometer chips ever built.

High-bandwidth memory (HBM2/HBM2e)

High-bandwidth memory provides fast, efficient data access, with bandwidth of up to 1.6 TB/s on the 40GB model and up to about 2 TB/s on the 80GB (HBM2e) model.
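As a rough illustration of what these bandwidth figures mean in practice, the Python sketch below estimates how long one full pass over the A100's memory would take at the quoted peak rate. It assumes decimal units (1 GB = 10^9 bytes) and that peak bandwidth is sustained, which real workloads do not achieve; the function name `full_memory_sweep_ms` is hypothetical.

```python
# Idealized lower bound on the time to read every byte of GPU memory once.
# Assumption: the quoted peak bandwidth is sustained (real kernels get less).

def full_memory_sweep_ms(memory_gb: float, bandwidth_tb_s: float) -> float:
    """Milliseconds to stream `memory_gb` gigabytes at `bandwidth_tb_s` TB/s."""
    bytes_total = memory_gb * 1e9        # decimal gigabytes -> bytes
    bytes_per_s = bandwidth_tb_s * 1e12  # decimal terabytes/s -> bytes/s
    return bytes_total / bytes_per_s * 1e3


if __name__ == "__main__":
    # A100 40GB at ~1.6 TB/s and A100 80GB at ~2 TB/s
    print(f"40GB @ 1.6 TB/s: {full_memory_sweep_ms(40, 1.6):.1f} ms")
    print(f"80GB @ 2.0 TB/s: {full_memory_sweep_ms(80, 2.0):.1f} ms")
```

Either way, a complete sweep of the card's memory takes only tens of milliseconds, which is why memory-bound training and inference steps benefit directly from HBM.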

AI and Deep Learning

The NVIDIA A100 is purpose-built for artificial intelligence and deep learning applications, delivering up to 20 times the performance of the previous-generation (Volta) GPUs.

Ray tracing

Accelerate GPU rendering for demanding visualization tasks. The A100 has no dedicated RT cores, so ray tracing runs on its CUDA cores, which makes it better suited to offline and batch rendering than to real-time ray tracing.

NVLink support

Use third-generation NVLink for fast GPU-to-GPU data transfers: up to 600 GB/s per GPU, roughly 10x the bandwidth of PCIe 4.0 and about 20x that of PCIe 3.0.
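The "roughly 10x" figure follows from simple arithmetic. The Python sketch below compares idealized transfer times for the same payload over NVLink and a PCIe 4.0 x16 slot. It assumes 600 GB/s as the A100's total NVLink bandwidth and 64 GB/s as the combined bidirectional bandwidth of PCIe 4.0 x16; the constants, the helper names, and the 120 GB payload are illustrative assumptions, and protocol overhead is ignored.

```python
# Idealized comparison of NVLink vs. PCIe transfer times for one payload.
# Assumptions: both figures are bidirectional totals, so a one-way copy
# would see roughly half of each; the ratio between them is unchanged.

NVLINK3_A100_GB_S = 600.0   # total third-generation NVLink bandwidth per A100
PCIE_GEN4_X16_GB_S = 64.0   # PCIe 4.0 x16, both directions combined


def transfer_seconds(payload_gb: float, link_gb_s: float) -> float:
    """Seconds to move `payload_gb` over a link running at `link_gb_s`."""
    return payload_gb / link_gb_s


def nvlink_speedup(payload_gb: float) -> float:
    """How many times faster NVLink moves the payload than PCIe 4.0 x16."""
    pcie = transfer_seconds(payload_gb, PCIE_GEN4_X16_GB_S)
    nvlink = transfer_seconds(payload_gb, NVLINK3_A100_GB_S)
    return pcie / nvlink


if __name__ == "__main__":
    payload = 120.0  # GB, hypothetical payload size
    print(f"NVLink:       {transfer_seconds(payload, NVLINK3_A100_GB_S):.3f} s")
    print(f"PCIe 4.0 x16: {transfer_seconds(payload, PCIE_GEN4_X16_GB_S):.3f} s")
    print(f"speedup:      {nvlink_speedup(payload):.1f}x")
```

The ratio does not depend on payload size (600 / 64 ≈ 9.4), which is why it is usually quoted as a flat "about 10x".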

Multi-Instance GPU (MIG)

Partition each A100 GPU into up to seven isolated instances, each able to run a separate application or user session at the same time.
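The following Python sketch makes the "up to seven instances" figure concrete by deriving the name of the smallest MIG profile from the card's memory size. It assumes the A100's layout of 7 compute slices and 8 memory slices, which is where the `1g.5gb` (40GB) and `1g.10gb` (80GB) profile names come from. The helper functions are hypothetical and do not query a real GPU. On an actual server, `nvidia-smi mig -lgip` lists the profiles the driver supports.

```python
# Illustrative only: derive the smallest MIG profile on an A100.
# Assumption: 7 compute slices and 8 memory slices per GPU, so the
# one-slice ("1g") profile gets 1/8 of the memory and fits 7 times.

COMPUTE_SLICES = 7
MEMORY_SLICES = 8


def smallest_mig_profile(total_memory_gb: int) -> str:
    """Name of the one-compute-slice profile, e.g. '1g.5gb' for 40GB."""
    per_slice_gb = total_memory_gb // MEMORY_SLICES
    return f"1g.{per_slice_gb}gb"


def smallest_profile_layout(total_memory_gb: int) -> list[str]:
    """Profile names when the GPU is split into the maximum number of instances."""
    return [smallest_mig_profile(total_memory_gb)] * COMPUTE_SLICES


if __name__ == "__main__":
    for mem in (40, 80):
        layout = smallest_profile_layout(mem)
        print(f"A100 {mem}GB: {len(layout)} x {layout[0]}")
```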

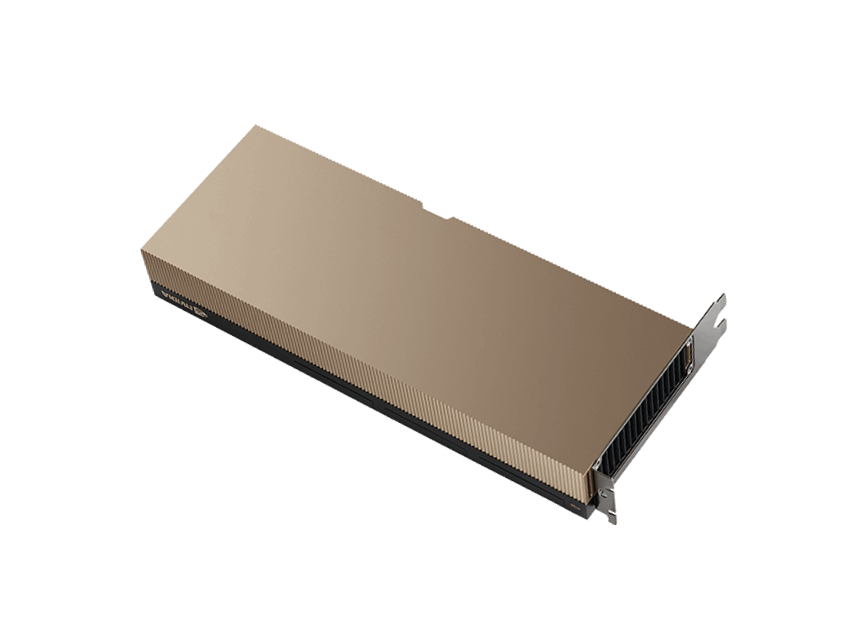

NVIDIA H100 GPU

| NVIDIA H100 spec | Value |
|---|---|
| Video memory capacity | 80GB HBM3 (SXM) / 80GB HBM2e (PCIe) |
| CUDA cores | 16,896 (SXM) / 14,592 (PCIe) |
| Max bandwidth | 3.35 TB/s (SXM) / 2 TB/s (PCIe) |

The NVIDIA H100 GPU, successor to the A100, offers unprecedented performance, scalability, and security for a wide range of workloads. It typically delivers two or more times the performance of the A100.

Hopper architecture

H100 GPUs deliver outstanding performance in HPC applications, up to 7x higher than the A100.

Connectivity

Up to 256 H100 GPUs can be connected with the NVIDIA NVLink Switch System to accelerate exascale workloads.
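To put the 256-GPU figure in perspective, the short Python calculation below totals the GPU memory available across such a system. It assumes 80GB per H100 and decimal terabytes; the function name and the range check are illustrative assumptions, not part of any NVIDIA tool.

```python
# Back-of-the-envelope aggregate GPU memory for an NVLink Switch System.
# Assumptions: 80GB per H100, decimal units (1 TB = 1000 GB).

MAX_GPUS = 256
H100_MEMORY_GB = 80


def aggregate_memory_tb(num_gpus: int, memory_gb: int = H100_MEMORY_GB) -> float:
    """Total GPU memory in TB for `num_gpus` connected H100s."""
    if not 1 <= num_gpus <= MAX_GPUS:
        raise ValueError(f"expected 1..{MAX_GPUS} GPUs, got {num_gpus}")
    return num_gpus * memory_gb / 1000


if __name__ == "__main__":
    print(f"{aggregate_memory_tb(MAX_GPUS)} TB across {MAX_GPUS} GPUs")
```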

AI training

The H100 features fourth-generation Tensor Cores and a Transformer Engine with FP8 precision, delivering exceptional AI training performance.

Multi-Instance GPU (MIG)

The H100 features second-generation MIG technology, which allows each GPU to be securely partitioned into up to seven separate instances.


Why Primcast?

Fast delivery

Instant A100 configurations are delivered within 5 minutes of payment verification.

99.9% SLA

Your dedicated GPU server is backed by an industry-leading 99.9% uptime SLA.
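For a sense of scale, the Python calculation below shows how much downtime a 99.9% uptime commitment permits. It assumes the SLA is measured over a 30-day month or a 365-day year, which may differ from the period defined in the actual contract terms; the function name is hypothetical.

```python
# Maximum downtime permitted by an uptime SLA over a given period.
# Assumption: the measurement period is a fixed number of days.

def allowed_downtime_minutes(sla_percent: float, days: float) -> float:
    """Minutes of downtime allowed by `sla_percent` uptime over `days` days."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


if __name__ == "__main__":
    print(f"{allowed_downtime_minutes(99.9, 30):.1f} min per 30-day month")
    print(f"{allowed_downtime_minutes(99.9, 365) / 60:.2f} h per year")
```

At 99.9%, that works out to about 43 minutes per month, or under 9 hours per year.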

24/7 support

Live GPU cloud experts are available via live chat, phone and email.

Data centers

Deploy your NVIDIA A100 dedicated server from New York, Miami, San Francisco, Amsterdam or Bucharest.

Deploy your A100 or H100 bare-metal cloud server in minutes!

Get started