Servers in stock

Checking availability...

-

Düşük gecikme yetenekleri sayesinde TPU'lar, öneri motorları ve dolandırıcılık tespit sistemleri gibi gerçek zamanlı tahminler gerektiren uygulamalar için uygundur.

TPU'lar, GPT-4 ve BERT gibi karmaşık modellerin eğitimi için optimize edilmiştir, bu sayede eğitim süresi ve maliyeti azalır.

Akademik ve kurumsal araştırmacılar, iklim modellemesi ve protein katlama simülasyonları gibi görevlerde TPU'ları kullanarak hesaplama gücünden ve verimliliğinden yararlanmaktadırlar.

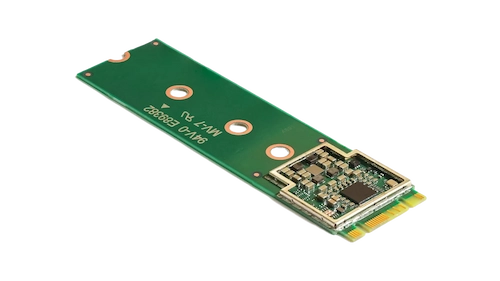

Bu kompakt hızlandırıcı, düşük güç tüketimiyle yüksek hızlı çıkarımlara olanak sağlayarak cihaz içi makine öğrenimini geliştirir.

Coral M.2 Accelerator'ı sisteminize entegre ederek, doğrudan cihazda verimli, gerçek zamanlı makine öğrenimi işlemlerini gerçekleştirebilir, gecikmeyi ve bulut tabanlı hesaplamalara olan bağımlılığı azaltabilirsiniz.

Hailo-8 edge AI işlemcisi, belleği de dahil olmak üzere, bir peniden daha küçük kompakt bir form faktöründe saniyede 26 tera-işlem (TOPS) sağlıyor.

Yapay sinir ağları için optimize edilmiş mimarisi, minimum güç tüketimiyle uç cihazlarda verimli, gerçek zamanlı derin öğrenmeye olanak tanır ve bu da onu otomotiv, akıllı şehirler ve endüstriyel otomasyon uygulamaları için ideal hale getirir.

Bu tasarım, maliyetleri ve enerji kullanımını azaltırken, uçta yüksek performanslı yapay zeka işleme olanağı sağlıyor.

TPU'lar, geleneksel GPU'lara kıyasla daha hızlı eğitim ve çıkarım süreleri sunarak, matris ağırlıklı hesaplamalar için özel olarak tasarlanmıştır.

Birden fazla birime dağıtılmış eğitim olanağı sağlar. Bu ölçeklenebilirlik, büyük modellerin verimli bir şekilde eğitilmesi için hayati önem taşır.

TensorFlow, PyTorch (OpenXLA aracılığıyla) ve JAX dahil olmak üzere önemli makine öğrenimi çerçevelerini destekleyerek mevcut iş akışlarına sorunsuz entegrasyon sağlayın.

TPU'lar, Google Kubernetes Engine (GKE) ve Vertex AI gibi hizmetlerle entegre edilerek, yapay zeka iş yüklerinin kolayca düzenlenmesi ve yönetilmesini kolaylaştırır.